I'm On A Roll Today

I'm on a roll today

More Posts from Skelerose and Others

1 year ago

Studio: MADHOUSE – Birdy The Mighty (1996) Birdy’s Facial Expressions

1 year ago

Ars Technica

OpenAI could be fined up to $150,000 for each piece of infringing content.

Well, this would be interesting...

10 months ago

BARI BARI DENSETSU (バリバリ伝説) 1987 | dir. Osamu Uemura

1 year ago

A quick summary of the last 16 months

8 months ago

No, I didn't "forget to pack a toothbrush and a phone charger." It's called on-site procurement. Solid Snake does it too.

1 year ago

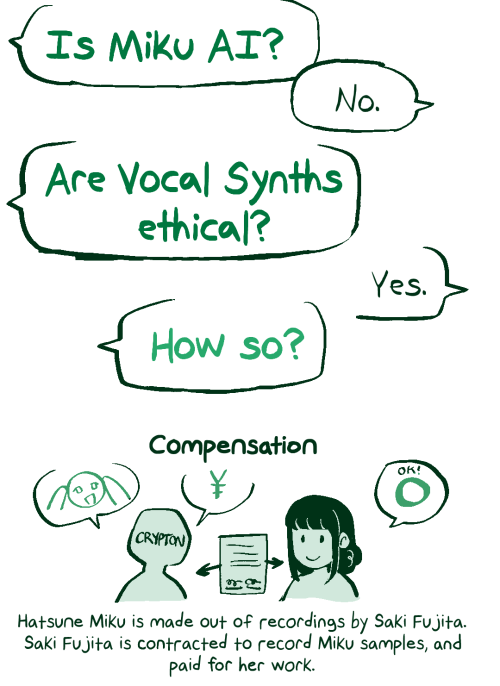

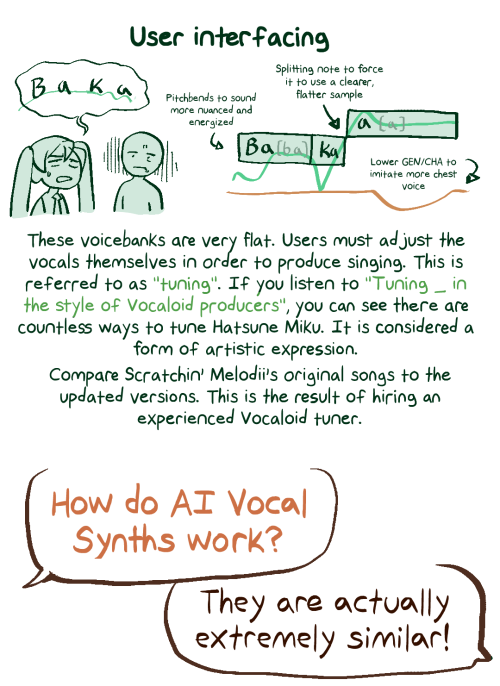

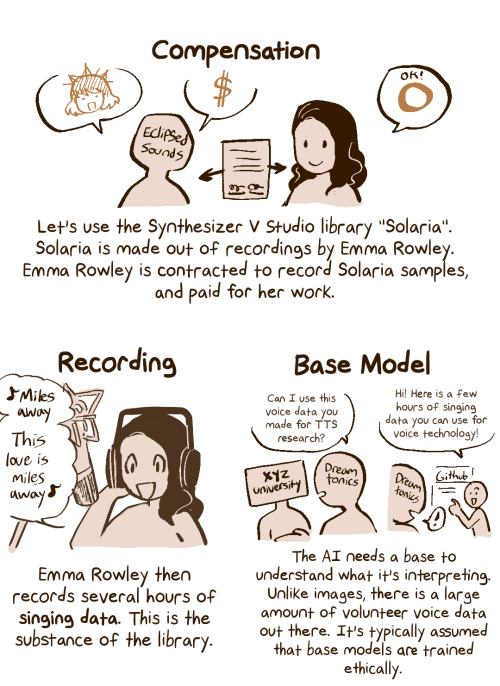

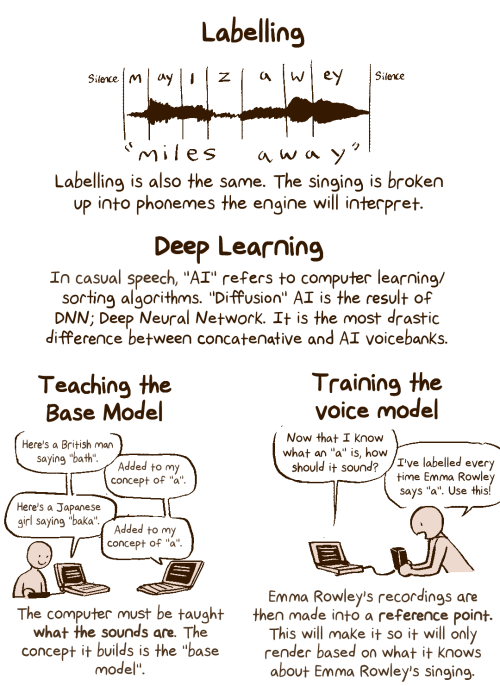

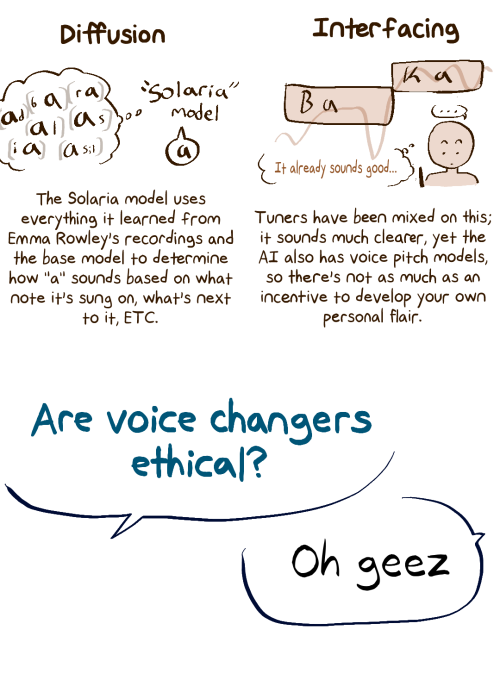

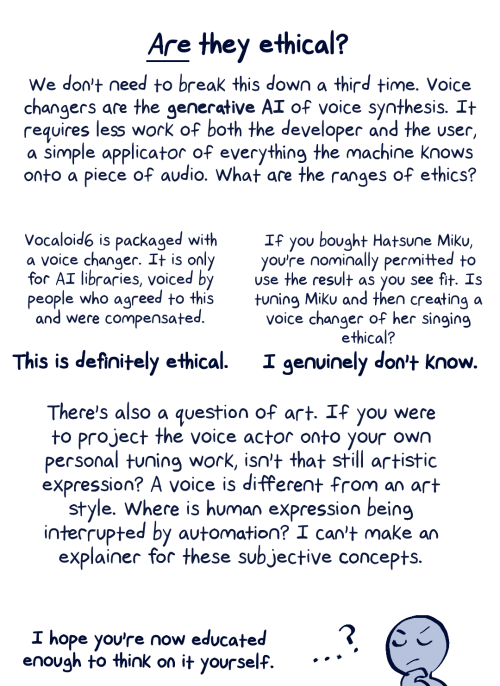

A lot of people try to explain this without knowing anything about how voice synthesis works, so here's my breakdown on No, Hatsune Miku Is Not AI, And No, AI Voice Synthesis Is Not Bad.


imaginationengine liked this · 4 months ago

no-words-for-this-one liked this · 7 months ago

violetbeetle reblogged this · 7 months ago

skelerose reblogged this · 7 months ago
